SUBTITLE

What happens if you are liquidated: financially, socially, politically, or by climate-induced flooding? Can AI tell the stories that humans cannot?

TECH STACK

COLLABORATORS

## Project Overview

Collaborating on Jen's work as the AI technologist, I was uniquely positioned to help share the narratives of laborers, activists, and feminists who faced unethical working environments that harmed their physical and mental health, or who fearlessly voiced their concerns, only to be silenced through political or social liquidation. When Jen first handed me her years of research, I was shocked by my own ignorance and by the vast scope of the project. In retrospect, the summer of 2022, when we started our art project, was a peculiar time. With the rise of diffusion models and tools like Midjourney and DALL·E 2, everyone was 'generating' a profile picture or something else with AI. As we grappled with what AI generation means for human creative work (its responsibilities, authenticity, and worth), we began researching text and video generation.

### Video Generation with AI

Our exploration began with diffusion models as well, aiming to generate video segments that AI would later distort and expand. I remember explaining the process to Jen: the model starts from a canvas of pure Gaussian noise and, guided by the prompt, removes a little of that noise at each step until a clear picture emerges. Jen pointed out the parallel between this process and our attempt to build something from what had been 'liquidated'. For me, the murky underwater footage of flooded areas visually echoed the noisy state these models begin from. So murky: where do we go from there? Although these initial experiments didn't make it into the final work because of limitations in control and cultural inclusivity, they led us to a deeper exploration of AI's potential in storytelling. I used a Latent Diffusion Model to generate each frame of the video as an image, then applied FILM (Frame Interpolation for Large Motion, research from Google Research and the University of Washington) to interpolate between the generated frames for smoother transitions. The work is archived in this GitHub repo.
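The step-by-step denoising described above can be sketched in a few lines. This is a toy, not the Latent Diffusion Model used in the project: it follows the standard DDPM ancestral-sampling update on a short list of pixel values rather than a latent image, uses the linear noise schedule from the DDPM paper, and replaces the trained, prompt-conditioned noise-prediction network with a hypothetical oracle that already knows the `target` image. Only the structure carries over: start from Gaussian noise, estimate the noise, subtract part of it, and repeat.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule beta_1..beta_T (the values used in the DDPM paper)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + step * i for i in range(num_steps)]


def sample(target, num_steps=1000, seed=0):
    """Toy DDPM ancestral sampling over a flat list of pixel values.

    `target` stands in for the image a trained, prompt-conditioned network
    would steer toward. Returns every intermediate state, noise first.
    """
    rng = random.Random(seed)
    betas = linear_betas(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)  # cumulative product, alpha-bar_t

    # x_T: begin from pure Gaussian noise, one value per pixel.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    trajectory = [x]
    for t in reversed(range(num_steps)):
        a, ab, b = alphas[t], alpha_bars[t], betas[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        # Hypothetical oracle: the exact noise separating x_t from the target.
        # A real model would predict this with a network eps_theta(x_t, t, prompt).
        eps = [(xi - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab)
               for xi, x0 in zip(x, target)]
        # Subtract the scaled noise estimate to get the mean of x_{t-1}.
        mean = [(xi - b / math.sqrt(1.0 - ab) * ei) / math.sqrt(a)
                for xi, ei in zip(x, eps)]
        # Posterior standard deviation; it is exactly zero on the last step.
        sigma = math.sqrt(b * (1.0 - ab_prev) / (1.0 - ab))
        x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        trajectory.append(x)
    return trajectory
```

Calling `sample([0.2, -0.5, 0.9, 0.0])` returns 1,001 states: the first is random noise and the last matches the target. Because the oracle knows the answer, every run converges exactly; a real model only approximates the noise, so what it produces reflects what it learned during training.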

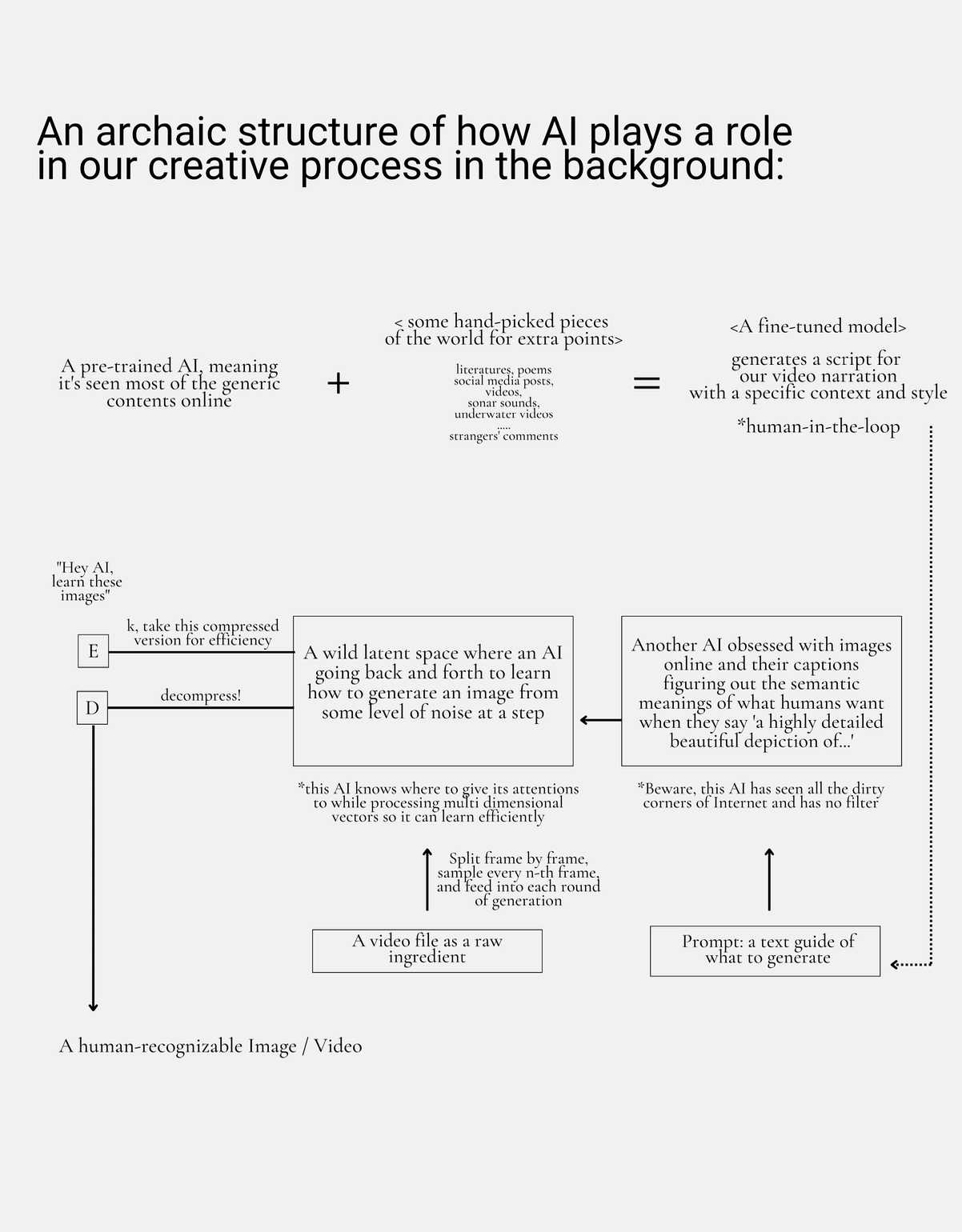
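The keyframe-then-interpolate pipeline can be sketched as follows. FILM itself is a neural network that estimates motion between two frames; `crossfade_midpoint` here is a hypothetical placeholder that simply averages pixels, so the sketch shows only where an interpolator plugs in, not what FILM outputs. The recursive midpoint schedule (depth `d` yields `2**d - 1` new frames between each pair of keyframes) is likewise an assumption chosen for illustration.

```python
from typing import Callable, List

Frame = List[float]  # one flattened grayscale frame, values in [0, 1]
Midpoint = Callable[[Frame, Frame], Frame]


def crossfade_midpoint(a: Frame, b: Frame) -> Frame:
    """Hypothetical placeholder: average the two frames pixel by pixel.
    A motion-aware model such as FILM would synthesize this frame instead."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def in_between(a: Frame, b: Frame, depth: int, midpoint: Midpoint) -> List[Frame]:
    """Frames strictly between a and b; depth d yields 2**d - 1 of them."""
    if depth == 0:
        return []
    mid = midpoint(a, b)
    return (in_between(a, mid, depth - 1, midpoint)
            + [mid]
            + in_between(mid, b, depth - 1, midpoint))


def build_video(keyframes: List[Frame], depth: int = 2,
                midpoint: Midpoint = crossfade_midpoint) -> List[Frame]:
    """Expand generated keyframes into a denser frame sequence."""
    if not keyframes:
        return []
    frames = [keyframes[0]]
    for a, b in zip(keyframes, keyframes[1:]):
        frames.extend(in_between(a, b, depth, midpoint))
        frames.append(b)
    return frames
```

Swapping `crossfade_midpoint` for a call to a real frame-interpolation model is the only change this structure needs. A plain cross-fade blends two frames rather than moving their content, which is why motion-aware models produce smoother transitions.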

### Text Generation with AI

Concurrently, we kept exploring how to deliver these stories with AI. What about text? It is important to note that this was the pre-ChatGPT era. I fine-tuned several models, including recurrent neural networks (RNNs), particularly Long Short-Term Memory (LSTM) networks, as well as BERT and GPT-2, on diverse texts ranging from social media posts to Chinese literature and corporate announcements. The LSTMs struggled with the complex relationships in such a varied corpus and produced inconsistent text. BERT's bidirectional context awareness improved consistency, but coherence remained elusive. GPT-2 (open-sourced and fine-tunable on our own machines, unlike its successors such as GPT-3.5) was the most promising. Pre-trained models did produce more natural text, suggesting that the more a model had 'studied' the 'unliquidated' content online, the better it performed. I am still pondering AI's reliance on existing, mainstream narratives and its potential to perpetuate the silencing of marginalized voices. The architecture of the archaic text generation methods we used is illustrated here (link to image).
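GPT-2 generates text autoregressively: it predicts a distribution over the next token given what came before, samples one, appends it, and repeats. The sketch below shows only that decoding loop, with a word-level bigram count table standing in for the neural network. It is not how the LSTM, BERT, or GPT-2 models in the project were implemented, and the `temperature` parameter is the usual softmax-temperature idea applied to raw counts (an illustrative choice).

```python
import random
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which: a toy stand-in for a learned next-token distribution."""
    counts = defaultdict(Counter)
    for line in corpus:
        words = ["<s>"] + line.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, max_words=30, temperature=1.0, seed=0):
    """Autoregressive decoding: sample the next word given the previous one, append, repeat."""
    rng = random.Random(seed)
    word, out = "<s>", []
    for _ in range(max_words):
        options = counts.get(word)
        if not options:  # dead end: this word was never followed by anything
            break
        candidates = list(options)
        # Temperature reshapes the distribution: below 1 sharpens it, above 1 flattens it.
        weights = [options[w] ** (1.0 / temperature) for w in candidates]
        word = rng.choices(candidates, weights=weights)[0]
        if word == "</s>":
            break
        out.append(word)
    return " ".join(out)
```

Because the table only holds word pairs seen in training, this generator can never produce a transition its corpus lacked: a blunt, small-scale version of the reliance on existing narratives described above.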

### Can AIs tell a story that we dare to tell?

Throughout the project, I was driven by the desire to explore AI's potential to tell the stories we dared not tell after the 'liquidations'. Instead, I had to confront the harsh reality that the stories we wanted to tell had already been erased from the models' training data. Out-of-the-box generative models were more evidence of the liquidation than protection against it. This realization hit hard when diffusion models consistently generated blond hair and double eyelids set against red Chinese patterns, instead of accurately representing East Asian women's features. The same limitations emerged when we tried to incorporate writing styles from Asian literature, highlighting the glaring absence of these voices from what the models had learned. (In the grand scheme of things, I am part of a history that faces the risk of liquidation.)

### Steganography

To protect these stories, we are archiving the raw content (videos, texts, and pictures) collected throughout the Pink Slime Caesar Shift via steganography. The video's pixels carry information about the real stories, which viewers can decode and reconstruct. Unlike cryptography, which hides a message's content, steganography conceals the very fact that anything is hidden. Viewers must first acknowledge the liquidated stories and then access them with a specific algorithm. Through this collaboration with the audience, we attempt to protect the liquidated stories that AI cannot yet tell properly.
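The project's exact encoding scheme is not described here, so the following is a minimal sketch of one common technique, least-significant-bit (LSB) steganography, applied to a flat list of 8-bit channel values from a single frame. It hides a UTF-8 message behind a 4-byte length header. The `key` parameter is a hypothetical stand-in for the "specific algorithm" viewers need: it seeds the pseudo-random order in which channel values are visited.

```python
import random


def _pixel_order(n, key):
    """Keyed pseudo-random visiting order; without the key the hidden bits look scattered."""
    order = list(range(n))
    random.Random(key).shuffle(order)
    return order


def embed(pixels, message, key):
    """Write a length-prefixed UTF-8 message into the lowest bit of 8-bit channel values."""
    payload = message.encode("utf-8")
    data = len(payload).to_bytes(4, "big") + payload
    bits = [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError(f"need {len(bits)} channel values, have {len(pixels)}")
    out = list(pixels)
    for idx, bit in zip(_pixel_order(len(pixels), key), bits):
        out[idx] = (out[idx] & ~1) | bit  # clear the lowest bit, then set it
    return out


def extract(pixels, key):
    """Read the header, then the payload, visiting channel values in the keyed order."""
    order = _pixel_order(len(pixels), key)

    def read_bytes(start_bit, count):
        result = bytearray()
        for b in range(count):
            value = 0
            for idx in order[start_bit + 8 * b : start_bit + 8 * b + 8]:
                value = (value << 1) | (pixels[idx] & 1)
            result.append(value)
        return bytes(result)

    if len(pixels) < 32:
        raise ValueError("too few channel values for a header")
    length = int.from_bytes(read_bytes(0, 4), "big")
    if 32 + 8 * length > len(pixels):
        raise ValueError("header length exceeds capacity (wrong key or no message)")
    return read_bytes(32, length).decode("utf-8")
```

Each channel value changes by at most 1, which is invisible to the eye. LSB data only survives lossless storage such as PNG frames; lossy video codecs such as H.264 discard those low bits, so a real archive has to account for compression.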

<BoldText>Upcoming Exhibition:</BoldText> The Metropolitan Museum, NYC, April-June 2025

<BoldText>Past Exhibitions:</BoldText>

<SerifText>Taipei Biennial 2023</SerifText>

<SerifText>Sculpture Center</SerifText>

<SerifText>MAIIAM Chiang Mai 2025</SerifText>

<SerifText>Blindspot Gallery (solo September)</SerifText>